Test

The post Test appeared first on MyLifeIsMyMessage.net.

The adapter failed to transmit message going to send port “sp_Test_SFTP” with URL “sftp://sftp.mycompany.com:22/%MessageID%.xml”. It will be retransmitted after the retry interval specified for this Send Port. Details:”System.IO.FileLoadException: Could not load file or assembly ‘WinSCPnet, Version=1.2.10.6257, Culture=neutral, PublicKeyToken=2271ec4a3c56d0bf’ or one of its dependencies. General Exception (Exception from HRESULT: 0x80131500)

File name: ‘WinSCPnet, Version=1.2.10.6257, Culture=neutral, PublicKeyToken=2271ec4a3c56d0bf’ —> System.Exception: SFTP adapter requires WinSCP to be installed. Please refer http://go.microsoft.com/fwlink/?LinkID=730458&clcid=0x409 . —> System.IO.FileNotFoundException: Could not load file or assembly ‘file:///C:\Windows\Microsoft.Net\assembly\GAC_MSIL\Microsoft.BizTalk.Adapter.Sftp\v4.0_3.0.1.0__31bf3856ad364e35\WinSCPnet.dll’ or one of its dependencies. The system cannot find the file specified.

I think BizTalk requires a specific older version of WinSCP and you can’t just download the latest one.

This error could also occur if you didn’t copy the file to the proper directory.

I found Michael Stephenson’s BizTalk 2016 SFTP blog, and it had a nice PowerShell script.

I just had to:

1) Change directory names (I had a Software directory similar to his, but I had to create a WinSCP subdirectory under it)

2) Run PowerShell with the “Run as Admin” option.

Then the script ran fine. I restarted the BizTalk Host Instance, and it got past this error, on to other errors that I’ll blog about in the near future.

By the way, I was able to run it in a 64-bit host with no problem. I remember that in older versions of BizTalk, the FTP adapter ran only in 32-bit hosts.

The post BizTalk 2016 – Could not load file or assembly ‘WinSCPnet, Version=1.2.10.6257 appeared first on MyLifeIsMyMessage.net.

There can be many reasons for the Python error “module not found”.

In my case, I had a laptop with both Python 2.7 and 3.6 installed. My Windows path contained the 2.7 release, but I was running the 3.6 release. So when I ran from the command line by just typing “python myprogram.py”, it worked. But when I tried it in Notepad++, I was using Python 3.6 and getting the “module not found” error. I also needed to run “easy_install” in Python 3 on the modules that were missing (they were installed in Python 2.7 but not 3.6).

I was pleasantly surprised that in Windows 10 it’s now super easy to update the path. They parse it for you and give you a special screen that lists each entry on its own line.

So I changed the three lines that started with C:\Python27 to C:\Python36.

To find the issue, I learned how to show the version of Python in the Python program itself.

```python
import sys

print("Python Version:" + sys.version)
```

For 3.6, the output starts with “Python Version:3.6”, followed by the build details.
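To catch the wrong-interpreter problem even earlier, a script can also print the path of the interpreter that is running it and stop if the version is too old. This is a minimal sketch, assuming 3.6 is the minimum you want; the `require_python` helper name is my own, not from any library. `sys.executable` shows at a glance whether the C:\Python27 or C:\Python36 install was picked up.

```python
import sys


def require_python(major, minor):
    """Describe the running interpreter, or raise if it is older than major.minor."""
    # sys.executable is the full path of the interpreter running this script
    description = "Python {}.{}.{} at {}".format(
        sys.version_info.major, sys.version_info.minor,
        sys.version_info.micro, sys.executable)
    # version_info compares like a tuple, so (3, 5, 2) < (3, 6) is True
    if sys.version_info < (major, minor):
        raise RuntimeError("Need Python {}.{}+, but running {}".format(
            major, minor, description))
    return description


if __name__ == "__main__":
    print(require_python(3, 6))
```

Putting this at the top of a script makes Notepad++ (or any other launcher) fail with a clear message instead of a confusing “module not found”.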

The post Python Module Not Found (different versions of Python) appeared first on MyLifeIsMyMessage.net.

I sometimes post my own code here so I can find an example I like in the future, rather than having to search the web. So there’s nothing extraordinary about the program below; it’s just a good example of how to do SQL Server insert and update commands using pypyodbc from Python.

It uses a relational database hosted on Amazon RDS (Relational Database Service) in the Amazon cloud (AWS). The purpose of this program is as follows:

1) Read a CSV and get the domain name from column 2 (the CSV header was already removed)

2) Check to see if that domain name exists in my table of domain names (SEOWebSite)

3) If it exists, update the seows_host_id to 4 (which is a foreign key to a table that specifies where the domain is hosted)

4) If it doesn’t exist, add it with some default values for various foreign keys.

```python
import pypyodbc
import sys

print("Python Version:" + sys.version)

connection = pypyodbc.connect('Driver={SQL Server};'
                              'Server=mydb.x000joynbxx.us-west-2.rds.amazonaws.com;'
                              'Database=demodb;'
                              'uid=myuser;pwd=mypass')
print("Connected")

cursor = connection.cursor()

# read each line of file
filenameContainingDomainNames = "c:/Users/nwalt/OneDrive/Documents/SEOMonitor/EBN Blogs Export_NoHeader.csv"

with open(filenameContainingDomainNames) as f:
    for line in f:
        fixLine = line.replace('\n', ' ').replace('\r', '')
        print("===============================================")
        print(fixLine)
        columns = fixLine.split(",")
        domainName = columns[1]
        print("domainName=" + domainName)

        cursor.execute(
            "SELECT seows_name FROM SEOWebSite WHERE seows_name = ?", [domainName])

        # gets the number of rows affected by the command executed
        # (DB-API drivers may report -1 for a SELECT, because the count
        # is not known until the rows are fetched)
        row_count = cursor.rowcount
        print("number of matching rows: {}".format(row_count))

        if row_count == 0:
            print("It Does Not Exist")
            SQLCommand = ("INSERT INTO SEOWebSite "
                          "(seows_userid, seows_name, seows_is_monetized, seows_is_pbn, seows_registrar_id, seows_host_id) "
                          "VALUES (?,?,?,?,?,?)")
            Values = [1, domainName, False, True, 4, 4]
            cursor.execute(SQLCommand, Values)
            connection.commit()
            print("Data Stored:" + domainName)
        else:
            print("Updating to EBN for domain=" + domainName)
            SQLCommand = "UPDATE SEOWebSite set seows_host_id = 4 where seows_name = ?"
            Values = [domainName]
            cursor.execute(SQLCommand, Values)
            connection.commit()

connection.close()
print("Connection closed")
```

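One annoying thing is that cursor.rowcount seemed to come back as -1 or 0, one less than I expected. (The Python DB-API allows a driver to report -1 when it can’t determine the row count, which often happens after a SELECT.)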

I didn’t need to fix it, but next time I might try a different way to check whether the domain already exists.
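One alternative that doesn’t depend on rowcount is to fetch a row and test whether anything came back. This is a minimal sketch of that idea, using Python’s built-in `sqlite3` module as a stand-in for pypyodbc (both follow the DB-API, use `?` placeholders, and provide `execute`/`fetchone`), with a cut-down version of the `SEOWebSite` table. The `upsert_domain` helper is hypothetical.

```python
import sqlite3


def upsert_domain(connection, domain_name, host_id=4):
    """Insert the domain if it is missing, otherwise update its host id.

    Returns "inserted" or "updated".
    """
    cursor = connection.cursor()
    cursor.execute(
        "SELECT seows_name FROM SEOWebSite WHERE seows_name = ?", [domain_name])
    # fetchone() returns None when no row matched, so rowcount is never needed
    if cursor.fetchone() is None:
        cursor.execute(
            "INSERT INTO SEOWebSite (seows_name, seows_host_id) VALUES (?, ?)",
            [domain_name, host_id])
        result = "inserted"
    else:
        cursor.execute(
            "UPDATE SEOWebSite SET seows_host_id = ? WHERE seows_name = ?",
            [host_id, domain_name])
        result = "updated"
    connection.commit()
    return result


if __name__ == "__main__":
    # In-memory database with a simplified table, just for trying this out
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE SEOWebSite (seows_name TEXT, seows_host_id INTEGER)")
    print(upsert_domain(conn, "example.com"))  # inserted
    print(upsert_domain(conn, "example.com"))  # updated
    conn.close()
```

With pypyodbc, the same function should work by passing in the connection from `pypyodbc.connect(...)`, though I haven’t run it against the RDS table.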

The post Simple Example Python Update SQL Database from CSV with pypyodbc appeared first on MyLifeIsMyMessage.net.

Today, with the popularity of GitHub, many people are using Markdown files to create their documentation.

While there are plug-ins for Chrome that I have yet to try, I found this cool site today: https://stackedit.io/editor.

The left side is where you paste the .md contents; the right side shows the formatted result.

Doesn’t that look a lot better than viewing it in Notepad?

To use it, just copy and paste the contents of the “.md” markdown file into the left side of StackEdit.io, and it formats it on the right, including retrieving the pictures. Note, though, that relative links might not work.

The post Online web-based viewer (and editor) for MD (markdown files) appeared first on MyLifeIsMyMessage.net.

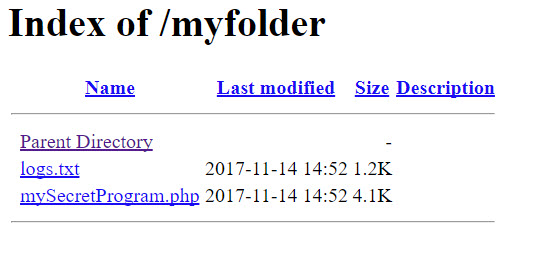

Suppose someone knows a folder name and tries to list that folder on your site to find out what’s inside of it. Without any protection, the browser might show a complete listing of the files in that folder.

Now, let’s assume that you don’t want people snooping around in this folder, and trying to run the various PHP programs or investigate the contents of any text files.

The solution is to create a small index.php file that returns nothing, such as this:

```php
<?php
// Silence is golden.
// Or display some message with an echo command:
// echo "Cannot be displayed";
?>
```

When someone requests the folder name without a filename after it, Apache will generally run that directory’s index.php.

You don’t need to change .htaccess or the cPanel indexing options if you do this.
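If you have many folders to protect, a short script can drop the same file into each one. This is a minimal sketch, assuming you run it against a local copy of the site (or a mounted path) before uploading; `add_silent_index` is a hypothetical helper. It skips any folder that already has an index.php or index.html, so existing pages are never overwritten.

```python
import os
import sys

SILENT_INDEX = "<?php\n// Silence is golden.\n?>\n"


def add_silent_index(root):
    """Create index.php in every folder under root that has no index page.

    Returns the list of files created.
    """
    created = []
    for folder, _subfolders, files in os.walk(root):
        if "index.php" in files or "index.html" in files:
            continue  # leave existing index pages alone
        path = os.path.join(folder, "index.php")
        with open(path, "w") as f:
            f.write(SILENT_INDEX)
        created.append(path)
    return created


if __name__ == "__main__":
    for path in add_silent_index(sys.argv[1] if len(sys.argv) > 1 else "."):
        print("created", path)
```

Running it a second time creates nothing, since every folder then has an index page.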

The post On Cpanel/WordPress/PHP Site – Keep Directory Listing from occurring appeared first on MyLifeIsMyMessage.net.

Both Alexa and the Google Home device support Speech Synthesis Markup Language (SSML).

For Alexa – see this SSML documentation, and for Google Home/DialogFlow, see this SSML documentation. SSML was created by the W3C’s Voice Browser working group.

Even though the Alexa doc says you need to wrap the SSML in the <speak> tag, I never used it there. Perhaps this is because they have a separate flag that states you are using SSML, as shown in this example:

```json
"outputSpeech": {
  "type": "SSML",
  "ssml": "<speak>This output speech uses SSML.</speak>"
}
```

Actually, the more probable reason is that I’m using the alexa-sdk for Node.js, and I think it treats all speech output as SSML.

The main features of SSML that I’ve used are 1) playing short mp3 sound files, and 2) adding pauses to make some of the phrases sound more natural. But you can also change the pitch, speed, and do other cool and interesting things with SSML.
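Here is a small sketch of those two features, written in Python purely for illustration: it builds an SSML string with a pause and an mp3 clip, then wraps it in an `outputSpeech` object like the one above. The helper names and the mp3 URL are hypothetical. In Google’s SSML, the text between the `<audio>` tags is fallback text that is used when the clip can’t be played.

```python
import json
from xml.sax.saxutils import escape, quoteattr


def pause(ms):
    """SSML pause of the given length in milliseconds."""
    return '<break time="{}ms"/>'.format(ms)


def audio(src, fallback=""):
    """SSML audio clip; the fallback text goes between the tags."""
    # quoteattr adds the surrounding quotes and escapes characters like &
    return "<audio src={}>{}</audio>".format(quoteattr(src), escape(fallback))


def speak(*parts):
    """Wrap already-built SSML fragments in the <speak> root element."""
    return "<speak>{}</speak>".format("".join(parts))


def output_speech(ssml):
    """Alexa-style outputSpeech object flagging the text as SSML."""
    return {"outputSpeech": {"type": "SSML", "ssml": ssml}}


if __name__ == "__main__":
    ssml = speak(escape("The word for peace is"), pause(500),
                 audio("https://example.com/audio/shalom.mp3",
                       "sorry, the audio is missing"))
    print(json.dumps(output_speech(ssml), indent=2))
```

Plain text is escaped before it goes into `speak`, so a stray `<` or `&` in a phrase can’t break the markup.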

Unfortunately, the testing tool in DialogFlow will not read or pronounce the SSML. To get that to work, you have to use the “Action Simulator” (https://console.actions.google.com/).

Some background: I created my Hebrew Vocabulary for Alexa app, which re-purposes some of the 2700 mp3 files that I created for my earlier Hebrew products. Now I was trying to test my first sample program with Google Actions on DialogFlow. I’m waiting on my actual “Google Home Smart Speaker” to arrive later this week, so I wanted to test the SSML online somehow. DialogFlow has a “play” button (a clickable word) next to the text, but when you click it, it only reads the text inside the <audio> tags rather than playing the mp3 file. That text is there so you can provide your own error message (for example, when the mp3 is not formatted properly or is missing). Notice that it also did not show the full audio “src” filename.

Below is an example of a couple of my tests. I had it programmed with an intent called “test word #”, where # refers to an item in an array of Hebrew words that I have in a JSON file. You can see how word 300 didn’t exist, and how 400 did.
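The lookup behind a “test word #” intent can be sketched like this. It is a simplified, hypothetical version, not my actual webhook code: it assumes the JSON file maps word numbers to entries with `english` and `mp3` fields (if yours is a plain array, an index check would replace the dictionary lookup), and it returns a spoken error when the number isn’t found, as happened with word 300.

```python
import json
from xml.sax.saxutils import escape, quoteattr

# Hypothetical word list; the real file's structure may differ.
SAMPLE_WORDS_JSON = """
{
  "400": {"english": "peace", "mp3": "https://example.com/audio/word400.mp3"}
}
"""


def word_response(words, number):
    """Build the SSML reply for the "test word #" intent."""
    entry = words.get(str(number))
    if entry is None:
        return "<speak>Word {} does not exist.</speak>".format(number)
    # The fallback text inside <audio> is what DialogFlow's "play" link reads
    return "<speak>Word {} means {}. <audio src={}>{}</audio></speak>".format(
        number, escape(entry["english"]), quoteattr(entry["mp3"]),
        "audio not available")


if __name__ == "__main__":
    words = json.loads(SAMPLE_WORDS_JSON)
    print(word_response(words, 300))
    print(word_response(words, 400))
```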

Before I wrapped my response with the <speak> tag, it was ignoring the SSML and reading the less-than and greater-than signs and everything else in the phrase. Notice the last two entries by my Google profile picture. One shows the audio/SSML, and that was before I used the <speak> tag. I just changed my JSON code and re-uploaded it (I’m running from an AWS Lambda function as a ‘webhook’). I repeated the exact same intent (“test word 400”), and this time it provided the spoken response. I didn’t have to click anything; it just said the proper response.

Alexa lets you test SSML online in a somewhat round-about way. You have to go to Developer.Amazon.com and create your Alexa Skill. You have to at least create a dummy skill to use the SSML “Voice Simulator”, because it’s found on the left “Test” tab under your skill. (Note: The “Test” tab disappears after you submit your Alexa Skill for certification.)

I’m also offering my Voice App development services for Google Home and Alexa (http://irvingseoexpert.com/services/alexa-skill-development-dallas/). Check out the link for details.

The post Testing SSML on Alexa vs Google Home/DialogFlow appeared first on MyLifeIsMyMessage.net.

re:invent 2017 – AWS Conference – Live Stream Notes – 11/29/2017

“The Cloud is the New Normal” – Andy Jassy – CEO Amazon Web Services

10:17 Expedia CEO –

Expedia – 22,000 employees and ?? billion in sales

Most global diversified travel platform in the world.

55 million phone calls/year

Started as a small division inside Microsoft.

Rewrote every line of code, retired 10 millionth line of C# code.

We consider ourselves “Serial Re-inventors”.

80% of mission critical apps will move to AWS.

2000 deploys per day?

3 Reasons: Resiliency, Optimization, Performance

Acquired Travelocity and migrated to cloud. ($280 million acquisition in 2015)

More instance families than anyone else, because different apps/developers have different constraints.

Announced ‘bare metal’ instances last night.

Elastic GPU, add a “little GPU”.

Containers – Elastic Container Service – ECS

People don’t just want containers – they want orchestration and management: the same capabilities as EC2, deep integration with other Amazon services, and scaling in a broader way…; over 100K active containers running at any one point.

People are becoming interested in Kubernetes – they were already running it on AWS, and today there is a new service, EKS – Elastic Container Service for Kubernetes.

Announcing AWS Fargate – run containers without managing servers!

Serverless / Lambda –

Aurora – fastest growing service in the history of AWS; scale out up to 15 read replicas in 3 availability zones, autoscaling available.

Oracle doubled price to run Oracle on AWS, who does that?

Preview Aurora scale out for both reads and writes (formerly scaled out for reads only). Failover in 100 milliseconds.

1st relational database to scale out to multiple data centers. Single region multi-master today, multi-region coming next year.

Preview of Aurora Serverless – so you pay only for what you use! On demand – autoscaling serverless Aurora. Pay only by the second when DB is being used.

Relational databases tend to break down when you have petabytes or exabytes; that is why they built DynamoDB.

In July this year, on “Prime Day” at Amazon.com, DynamoDB processed 3.34 trillion requests, peaking at 12.9 million requests per second.

Announcing DynamoDB Global Tables – the first fully managed, multi-master, multi-region database in the world.

Announcing the launch of DynamoDB Backup and Restore – the only cloud database to provide on-demand, continuous backups. Available today, with point-in-time restore coming in 2018.

Announcing: Amazon Neptune – fully managed graph database; supports the Apache TinkerPop (tinkerpop.apache.org) and W3C RDF graph models; fast, scalable, reliable… Supports both Gremlin and SPARQL.

Modern companies use multiple types of databases, often in a single application. AWS has breadth and depth of selection.

Amazon S3 is the most popular choice for “Data Lakes”.

Analytics Services:

Athena – for unprocessed data such as logs

16 management frameworks for Big Data (like EMR/Hadoop, etc…)

Redshift – something like a data warehouse

Elasticsearch – real-time dashboards

Kinesis – real-time processing of streaming data

QuickSight – Business Intelligence https://quicksight.aws/

ETL – hadn’t existed in the cloud until they launched “Glue” about a year ago. https://aws.amazon.com/glue/

11:07 Second customer speaker: Goldman Sachs – Analytics Users – Managing Director Roy Joseph

Has been around since 1869, almost 150 years.

1 out of 4 employees is an engineer, 1.5 billion lines of code, 700 applications.

2000 Servers in Cloud Environment.

Three client-centric innovations (applications):

1) Marcus – over $2 billion in small loans, app written in a year.

2) Marquee – access to risk and pricing platform

3) Symphony – Secure messaging and collaboration

Obstacles to overcome

1) Control of environment

2) Data privacy (the real show stopper!)

Was not an “easy sell” internally. They needed an industry leader that builds new business, with a track record of innovation… chose AWS.

For them, AWS created B.Y.O. Key – Bring Your Own Key architecture. Rolled out in August last year as part of KMS (Key Management Service) https://aws.amazon.com/blogs/aws/new-bring-your-own-keys-with-aws-key-management-service/

Includes:

1) Anomalous behavior detection

2)

3)

Apply the “trust but verify” model to key management.

11:18

Announcing “S3 Select” – allows you to pull out only the data and objects you need from S3.

Reduce the cost of apps querying S3 by filtering data with standard SQL SELECT statements. Sometimes up to a 400% improvement!

Presto Query –

http://www.tpc.org/tpcds/ TPC-DS used for benchmarks.
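From Python, S3 Select is exposed in boto3 as the S3 client’s `select_object_content` call. This is a minimal sketch, assuming a CSV object with a header row, boto3 installed, and AWS credentials configured; the bucket, key, and column names are placeholders. The request-building and result-collecting parts are split out so they can be tried without AWS.

```python
def build_select_request(bucket, key, sql):
    """Keyword arguments for boto3's S3 select_object_content on a CSV object."""
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": sql,
        # first CSV line is a header, so columns can be referenced by name
        "InputSerialization": {"CSV": {"FileHeaderInfo": "USE"}},
        "OutputSerialization": {"CSV": {}},
    }


def collect_records(event_stream):
    """Join the Records payloads from the response's event stream into text."""
    chunks = []
    for event in event_stream:
        if "Records" in event:  # other events are Stats, Progress, End, ...
            chunks.append(event["Records"]["Payload"])
    # decode once at the end so multi-byte characters split across chunks survive
    return b"".join(chunks).decode("utf-8")


def s3_select(bucket, key, sql):
    import boto3  # imported here so the helpers above work without boto3
    s3 = boto3.client("s3")
    response = s3.select_object_content(**build_select_request(bucket, key, sql))
    return collect_records(response["Payload"])


if __name__ == "__main__":
    # Placeholder bucket/key/columns: replace with your own
    print(s3_select("my-bucket", "logs/2017-11-29.csv",
                    "SELECT s.status, s.url FROM S3Object s WHERE s.status = '500'"))
```

CSV values arrive as strings, which is why the filter compares `s.status` to `'500'` rather than to a number.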

Can we further extend the data lake?

Glacier – has been hard to query, hard to pull out just what you want (as above).

Announcing: “Glacier Select” – similar to “S3 Select” above (at standard or expedited speed or both, depending on your needs).

11:23 House band and Eric Clapton

Machine Learning – should not be so black box, cryptic…

The hype and the hope here is tremendous.

“Machine learning” is the buzzword du jour.

Amazon recommendations – people may like this product – all fueled by Machine Learning

(also the Prime Air drones, speech recognition and Alexa).

It’s still early for most customers.

Three layers of the stack:

1) For expert machine learning practitioners – accustomed to building/tuning models themselves on P2/P3 instances.

With Machine Learning – the one constant is change.

More TensorFlow on Amazon than anywhere else, but maybe Caffe2 is the choice, or MXNet is the best.

We will support them all. (Torch, Keras, …)

Released “Gluon” to build neural networks that dynamically flex.

Gluon is a new open source library by AWS and Microsoft: https://aws.amazon.com/blogs/aws/introducing-gluon-a-new-library-for-machine-learning-from-aws-and-microsoft/

There aren’t that many ML experts in the world yet.

ML is still too complicated for most developers. Even small models consume a lot of compute time. Then they have to be tuned (quite difficult, up to millions of parameters). Developers throw up their hands in frustration. The lifting is “too heavy”.

Original vision statement for AWS – allow any person at any company to have access to the same tools as the largest companies in the world.

Introducing: Amazon SageMaker – easily build, train and deploy machine learning models.

Create your own algorithms or use built-in.

8 of 10 run two times faster than anywhere else, and 2 of them run 3 times faster.

Training is easier in SageMaker than anywhere else: specify the location of the data in S3 and the EC2 instance type; it spins up a cluster, then tears it down when done. Do you change the data for the model, or how do you choose hyper-parameters?

Tuning parameters has been highly random. Hyper-parameter optimization (HPO) is done for you; it spins up multiple copies of your model, then uses machine learning to inform the machine learning model. This can be 1000s or even millions of parameters. You can pick some options, and deploy your model to production with one click.

11:42 Announcing “DeepLens” – for deep learning: a high-definition camera with on-board compute, optimized, with Greengrass in it (can use Lambda triggers). For example, if it recognizes a license plate, it could open the garage door. It can send you an alert when the dog jumps on the couch.

11:43 Guest Speaker Dr. Matt Wood – GM Artificial Intelligence at AWS

Example: Music recommendation service, optimize it, deploy it, then make recommendations. Then a little fun with Deep Lens.

It’s unlikely you get the best model the first time; you have to tune. HPO (above) is automatic tuning that improves accuracy, then re-tests. It iteratively picks interesting features of each model and keeps improving accuracy, then selects the best performing model. Single click to a fully managed multi-Availability Zone cluster.

What can you do? Train dozens and dozens of more models, gets addictive quickly. Let your imaginations run wild!

Experimenting is the best way to learn and improve your skills. Creating AWS Deep Lens – first deep learning wireless video camera, to help developers learn and test their skills.

Taking orders for the camera starting today!

11:53 Amazon Rekognition – Search, analyze, and organize millions of images.

By picture matching, several victims of human trafficking have been recovered using this technology.

Can detect smiles, frowns, celebrities, and inappropriate content.

People asked – can you do the same thing for video? Harder – because it deals with time and motion, and needs context of previous and next frames.

Announcing – “Amazon Rekognition Video” – “Video in, People, activities, and details out.”

Includes “people tracking” (using technology called skeleton modeling) to know if person is in frame or not.

It handles “real time” video as well. Service will continue to get better every month.

People were asking, how do we get our data and videos into the cloud?

Announcing – “Kinesis Video Streams” – Securely ingest and store video, audio, and other time encoded data (like RADAR).

How about language? Last year launched both Polly and Lex, but so many other things users want to do with language.

Hard to search audio, so have traditionally converted audio to text. Since it is expensive, people only pick out the most important things.

Announcing – “Amazon Transcribe” – long form automatic speech recognition, analyzes any .wav or .mp3.

Can be used for call logs and meetings; starting with English, with other languages coming in the months to come.

Machine learning adds punctuation and grammatical formatting, along with timestamps. Supports even “poor” audio, like phone calls and lower bit-rate audio. Coming soon: will be able to distinguish between multiple speakers, and you can add your own custom libraries and vocabularies. Then you want to take the transcribed text and translate it into other languages.

Announcing – “Amazon Translate” – automatically translates text between languages. Great for use cases that require real-time translation (customer support, online media). Coming soon: recognize the source language on the fly.

Announcing “Amazon Comprehend” – full natural language processing service. Discover valuable insights from text.

Four categories: 1) Entities, 2) Key phrases, 3) Language, 4) Sentiment

Can do “topic modeling” – look at thousands or millions of documents/articles. Can be used to group documents, or how to show them to your customers. Can do ?? documents for $1.80. (missed the number).

12:12 How NFL is using Machine Learning – Michelle McKenna-Doyle, SVP and CIO, National Football League

Largest sports club in the world.

In 1 week of games – 3 terabytes of data is collected.

Next Gen Stats Platform – giving broadcasters detailed stats about real-time speed, movements, distance a player has moved… Will also be available for post-game analysis. AWS is now an official technology partner of the NFL and Next Gen Stats.

All this will enhance the “fan experience.” Sensors are placed on each player to provide this data.

For example, can compute Quarterback Efficiency. Can take into account field condition and weather, things that the human mind may not be able to correlate. Can look at why a play was successful and how it can be quantified.

What is the offensive formation “pre-snap”? What did the defending team do? Takes key identifiers: when the ball was snapped, when it was passed, when it was caught, and when the tackle was made. Why? To get new insights, and to show probabilities of success for various teammates. Mid-play catch probabilities are now available. Can see how good the quarterback’s decision making was.

12:24 House Band – Tom Petty 1981 song “The Waiting”

The Next phase of IOT – The Internet of Things –

Trying to get data to edge devices is hard and painful.

Examples: genome sequencing, John Deere tractors sending real-time data to AWS and back to farmers, sensor data from cars, updating maps…

Device manufacturers are excited about getting data from their own assets.

Frontier 1: Getting into the Game

Example: They want to let you, as a customer, push a button when you want dishes picked up by room service. Simple, straightforward Lambda triggers are how they want to get into IoT.

Announcing: “AWS IoT 1-Click” – creates Lambda trigger for you.

Frontier 2: Device Management – 1) onboard and provision fleets, 2) monitor and query devices, 3) implement corrective action.

Announcing: “AWS IoT Device Management” – helps onboard/deploy devices in bulk, example 1000s of light bulbs to multiple locations in one click, manufacturer, serial #, firmware, and query on those dimensions (to determine where to troubleshoot). Do updates over the air on part or all of your fleet of devices.

Frontier 3: IoT Security –

existing “IoT Core” helps with security of individual device, but now

Announcing: “AWS IoT Device Defender” – define security policies for fleets of devices.

1) Monitors fleets of devices for policies and best practices (auditing, for example not sharing certificates across devices)

2) Monitor device behavior –

3) Identify anomalies and out of norm behavior

Frontier 4: Analytics – has been hard to make sense of it, but customers want to do it.

Announcing: “AWS IoT Analytics” – cleans, processes, and stores IoT device data.

Define an analytics channel, select data to store, ingest it, and configure it to enrich or transform the data. It also needs other data to make it useful; e.g. a vineyard might know the humidity of the soil, but needs to know the predicted rainfall that day before turning on irrigation. Performs ad hoc queries and sophisticated analysis, and prepares data for machine learning.

Frontier 5: Smaller Devices – take action based on the data they are getting; some are big enough or expensive enough to house a CPU. But many new ones have an MCU (Micro-Controller Unit) instead, and MCUs outnumber CPUs 40 to 1. Devices like smoke detectors and soap dispensers… are not connected to the cloud.

Announcing: Amazon FreeRTOS – IoT operating system for microcontroller-based edge devices.

Working with Texas Instruments, and other companies…

May not have connectivity to make a round trip to the cloud, but can connect to a Greengrass device nearby (https://aws.amazon.com/greengrass/) that has Lambda on board.

Announcing: “Greengrass ML Inference” – lets you run machine learning inference “at the edge”.

No latency from going back to the cloud. Most will use SageMaker to build models, then send them over the air to the devices.

After winter comes spring

Are you going to play? Huge penalty for companies that do not.

Amazon AWS released 1300 features this year, a 3.5 per day average.

All your competitors will be using the cloud. It’s a golden age – and we will be there every step of the way to help.

12:46 end

The post AWS re:invent 2017 – Notes from LiveStream – Key Note by Andy Jassy appeared first on MyLifeIsMyMessage.net.

AWS re:invent Live Stream – Werner Keynote 11/30/2017

Werner Vogels is VP and CTO of Amazon (AWS).

Egalitarian Platform – everyone has access to the same storage, analytics, algorithms, etc…

What sets a company apart is the quality of the data they have.

G.E. – You go to bed as a manufacturing company, and you wake up as a data and analytics company.

Every device that draws a current has the possibility of connecting (to the internet).

Can now build neural networks that we can execute in real time (used to be just offline).

Example of a Philippine application where a machine learning system tells farmers how much fertilizer to use and when to apply it. Saved 90% on fertilizer costs and doubled the produce.

Voice Apps – natural way of working with computers.

Announcing: Alexa for Business – fully managed

Get access to your home private skills at work.

Imagine saying the following:

Alexa, 1) cancel my staff meeting, 2) what meetings do I have today? 3) How many laptops do we have in stock?

Integration with Salesforce, flight information, reimbursement software, HR skills, Splunk skills (error logs).

Wynn Hotel is putting Amazon Echos in every room of their hotel, with private skills to lower blinds, control TV, ask about your room bill, etc… No longer need five different remote controls!

1) Provision and manage shared services

2) Configure conference rooms

3) Enroll users and assign skills

4) Build Custom Skills

11:05 Voice represents the next biggest disruption in computing.

I made a little picture to emphasize this quote:

11:08 Three Operational Planes

1) Admin Plane

2) Control Plane

3) Data Plane

Kuala Lumpur Video Streaming Business

11:13 AWS Well-Architected framework – 5 Pillars

1) Operational Excellence

2) Security

3) Reliability

4) Performance

5) Cost Optimization

Lens

1) HPC – High Performance Computing

2) Serverless

Certification

Bootcamps – train others to do well-architected systems

The system you build now is not going to be the same as it is six months from now.

Principles:

1) Stop guessing capacity needs

2) Test systems at production scale

3) Automate to make architectural experimentation easier

4) Allow for evolutionary architectures

5) Drive your architecture using data

6) Improve through game days

1) Identity

2) Detective controls

3) Infrastructure protection

4) Data protection

5) Incident Response

1) Implement a strong identity foundation

2) Enable traceability

3) Apply security at all layers

4) Automate security best practices

5) Protect data in transit and at rest

6) Prepare for security events

11:19 Dance like no one is watching, encrypt like everyone is.

Eliminate IAM users; get down to the minimal permissions needed. Don’t run anything under the root account.

Ubiquitous Encryption – use HTTPS/TLS (see toolkit)

No excuse anymore to not use encryption

Security is your job, not just the security team. It’s all of our jobs to protect the customers.

The new security team: Operations, Developers,

Pace of Innovation,

11:22 Protection in a CI/CD world – Build servers have CloudTrail enabled, security in the pipeline,

every update to source code is validated, …

Amazon Inspector can scan the software you are deploying.

AWS Config Rules – great tool – monitor and compare how the system looked 2 weeks ago with now.

Tracks all changes in your environment.

CloudTrail will log every API call to every service, and put in S3.

11:26 How has development changed?

1) Has to be more security aware

2) More collaborative

3) More Languages

4) More services

5) More mobile

6) QA and Operations are deeply integrated

11:28 If you develop in this fast changing environment, you need help.

There is something extra we need to do for you.

Every great platform has a great Integrated Development Environment (IDE).

Announcing: AWS Cloud 9 – a cloud IDE for writing, running, and debugging code. Generally available today.

Speaker: Clare Liguori – Sr Software Engineer

Cloud 9 – can pull up in any browser.

Can change theme, key-binding.

Several languages and syntax highlighters installed.

Can run the code directly in the IDE.

You can see all the Lambda blueprints in the IDE.

Has breakpoints and debugger.

One user can share his Cloud 9 environment to another person, so you can pair program and review code.

You can chat inside the IDE. As one person types, his changes show up on the partner’s screen.

After testing, you can deploy to Lambda, then run it from Lambda.

11:37 Back to Werner speaking

Deep integration with CodeStar tools (Pipeline, Deploy, CodeCommit, CodeBuild).

Availability, reliability, and resilience in 21st century architectures.

Quote from years ago: Everything will fail all the time. You don’t know when, but it might.

11:53 Nora Jones – Chaos Engineering – from Netflix

Chaos Experiments – look a lot like unit tests, but can add latency and time between calls.

We call them “experiments” instead of tests; we assume we are resilient to the failures.

Chaos engineering doesn’t replace unit testing, but works with it.

Worked on the Chaos Engineering book (O’Reilly publishers).

You may have heard of their “chaos monkey”.

1) Graceful Restarts + Degradation

2) Targeted Chaos

3) Cascading Failure

4) Failure Injection

5) ChAP – Chaos Automation Platform

Not if it fails, but what to do when it fails.

The key metric for Netflix is whether or not you can press “play”;

total is called: SPS – Stream Starts per Second

Smallest fraction of traffic possible to know if chaos experiment is working properly.

They needed 2% signal of actual live traffic/transactions, route 1% into control cluster, and 1% into experiment cluster.

One is the control, and in the other one they add in their points of failure.

Automated Canary Analysis – if things go wrong, they end the experiment early, before it renders Netflix unusable for customers (then the developer can go debug it).
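The 1%/1% split and the early stop can be illustrated with deterministic bucketing: hash each user ID into 10,000 buckets, send one slice to the control cluster and an equal slice to the experiment cluster, and abort if the experiment group’s SPS falls too far below control. This is only a sketch of the idea under my own assumptions (SHA-256 hashing, a 5% threshold, and the function names are all hypothetical), not Netflix’s ChAP implementation.

```python
import hashlib

BUCKETS = 10000  # one bucket is 0.01% of traffic


def assign_cluster(user_id, percent=1.0):
    """Route a user to "control", "experiment", or "production".

    Control and experiment each receive `percent` percent of users; hashing the
    ID keeps each user in the same group for the whole experiment.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % BUCKETS
    slice_size = int(BUCKETS * percent / 100)
    if bucket < slice_size:
        return "control"
    if bucket < 2 * slice_size:
        return "experiment"
    return "production"


def should_abort(control_sps, experiment_sps, max_drop=0.05):
    """True if the experiment's stream starts per second (SPS) fall more than
    max_drop (a fraction) below the control group's SPS."""
    if control_sps <= 0:
        return False  # no baseline to compare against yet
    return (control_sps - experiment_sps) / control_sps > max_drop


if __name__ == "__main__":
    counts = {"control": 0, "experiment": 0, "production": 0}
    for i in range(100000):
        counts[assign_cluster("user-{}".format(i))] += 1
    print(counts)  # roughly 1% / 1% / 98% of the users
    print(should_abort(1000.0, 990.0))  # 1% drop: keep going
    print(should_abort(1000.0, 900.0))  # 10% drop: stop the experiment
```

Because control and experiment are the same size and drawn the same way, their SPS can be compared directly; the only difference between them is the injected failure.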

They automated the experiments, and the criticality of them. They run the critical ones more often.

http://www.PrinciplesOfChaos.org

Chaos doesn’t cause problems, it reveals them.

12:05 Back to Werner

Gall’s Law – a complex system that works is invariably found to have evolved from a simple system that worked.

Werner gives example of which “planes” are removed off your plate when you go with various cloud services like hosted RDS.

You should use as many “managed services” as possible to achieve reliability and high availability.

Launched Amazon MQ and Time Sync yesterday.

You can focus just on the business functions you want to write.

The rise of microservices.

Scaling components down to the minimum business logic that has scaling and reliability requirements.

Example, login/security service is used on every page, but business logic differs on each page; so one needs to be scaled at a different level. Decomposing into smaller components… Container Technology is helping with this. Has become the default if you want to build microservices.

12:12 Abby Fuller – Senior Technical Evangelist – expert on containers.

Tons of options (a good thing): ECS, Fargate for ECS, EKS, Fargate for EKS

The power is in the choices.

Monzo is a mobile-only bank in England with about 350 microservices.

The idea was that “Highly available Kubernetes was not for the faint of heart,” so “manage Kubernetes for me” and let me focus on the application. Containers in production can be hard work, with lots of moving pieces.

Which brings us to “Fargate” and the future, not a service, but technology to help…

12:20 Start Demo of Fargate in action.

It’s not about how, but how well.

Don’t make me worry about settings, tuning, or images; just run it. Let AWS handle the heavy lifting.

Create systems that will support you in 2020.

12:22 Back to Werner –

Question: So what does your future look like?

Answer: All the code you ever write is business logic.

iRobot – strict costs: customers only pay for the robot; they don’t pay for the cloud services that come with it.

All their code is serverless, so they never have to pay for idle time.

Agero – a company whose devices/software can detect whether a vehicle has been in an accident – runs Crash Detection on Lambda.

HomeAway – established business – takes in 6 million photos/month. Entirely serverless!

Architectural Principles:

1) State machines

2) Tables != Databases

3) Events as Interfaces

4) Encrypted parameter store

What customers are asking for:

1) Language Support

2) Performance

3) Function concurrency

4) VPC Integration

4 new powerful features for AWS Lambda:

1) API Gateway/VPC Integration

2) Concurrency Controls

3) 3GB memory support

4) .NET Core 2.0 support

5) and Go (language)

Making Serverless even more “Less”

1) Getting started

2)

Announcing: AWS Serverless Application Repository – discover, deploy, publish, reuse

12:32 Dr. Walter Scott – CTO and Founder, DigitalGlobe

DigitalGlobe deals with images, maps, and satellite photos of Earth.

There is a lot of information in these pictures, but it’s a really big planet, i.e. BIG DATA!

80 Terabytes collected every day from their satellites.

Before AWS, had their own data center with 100 Petabytes of images, but it was “stuck in a jail”.

Used the Snowmobile (data center on wheels) to transfer data.

Moved 17 years’ worth of data in a single cost-effective operation to two regions in Glacier.

Second challenge – provide on-demand access while still managing cost.

One option: take the last 180 days and do age-based caching.

But two problems:

1) Was still a big cache

2) Still missed a lot – Only 40% cache hit rate.
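To make the age-based caching idea concrete: a cache that holds only the last N days of imagery scores a hit only when a request is for imagery captured inside that window. The sketch below measures that hit rate over an access log; the log format (one capture date per request) and the sample requests are hypothetical, not DigitalGlobe's data.

```python
from datetime import date, timedelta


def age_based_hit_rate(access_log: list[date], today: date, window_days: int = 180) -> float:
    """Fraction of requests for imagery captured within the last `window_days` days,
    i.e. the hit rate of a cache that holds only the most recent imagery."""
    if not access_log:
        return 0.0
    cutoff = today - timedelta(days=window_days)
    hits = sum(1 for captured in access_log if captured >= cutoff)
    return hits / len(access_log)


if __name__ == "__main__":
    today = date(2017, 11, 30)
    # Hypothetical log: 4 requests for recent imagery, 6 for historical imagery.
    log = [today - timedelta(days=d) for d in (1, 30, 90, 170, 400, 900, 1500, 2200, 3000, 5000)]
    print(age_based_hit_rate(log, today))  # 0.4
```

Any request for historical imagery is a guaranteed miss under this policy, which is why the hit rate stalls when access mixes current and historical data.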

We have gone from viewing images, to analyzing images.

Data access is highly variable and diverse, including current and historical data.

They turned to a machine learning service (SageMaker) to solve the caching problem.

Used pictures of villages in Africa to know how many vaccines to prepare and send.

Can we predict where the next access is likely to be, and preload it from Glacier before it is needed?

Got the cache hit ratio up to 80%, trending toward 90%.
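DigitalGlobe used SageMaker to predict the next access. The sketch below deliberately swaps in a far simpler technique, ranking not-yet-cached tiles by how often they were recently requested, just to show the shape of “predict, then preload from Glacier.” The tile IDs, the `prefetch_candidates` name, and treating a tile as the unit of caching are assumptions.

```python
from collections import Counter


def prefetch_candidates(recent_requests: list[str], cached: set[str], k: int = 3) -> list[str]:
    """Return up to k of the most frequently requested tiles that are not cached yet.

    In a real system these would be restored from Glacier ahead of demand.
    """
    counts = Counter(tile for tile in recent_requests if tile not in cached)
    return [tile for tile, _ in counts.most_common(k)]
```

A trained model would replace the frequency count with a learned prediction, but the surrounding loop (score candidates, skip what is already cached, preload the top few) stays the same.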

Third problem solved: Analysis – extracting information from 100 Petabytes at scale.

GBDX – Geospatial Big Data Platform – geo data as a service

Takes unstructured imagery, makes it structured, and pulls out various features.

GBDX Notebooks, built on the Jupyter notebook framework.

Geoscape – continuously updated service of all buildings, roads, roof heights, tree canopies, etc… in Australia.

Used for telecom: as it moves to 5G, signals are blocked by things like trees.

Wildfires in 2014, how to evacuate people… Time = Risk to Life.

Geoscape takes the guesswork out for first-response workers.

GBDX for Sustainability Challenge – an experiment with purpose – seeking ideas for solutions to the UN Sustainable Development Goals, like good health and well-being. DigitalGlobe.com/revinent

12:43 Back to Werner

Machine learning impacts: developer tools, operations, security

Multi-Lingual Social Analytics

737 Flight Simulation – all machine learning; Alexa talks to the pilot and gives advice about the best actions to take.

trainline – price prediction

Guest speaker – Sensors, machine learning and real humans – Mati Kochavi – Founder, AGT International and Heed

Tribe of storytellers – an endless search for telling stories in new ways, and today we all join this journey.

Two UFC MMA fighters (Diakiese vs Barboza) come on stage.

Uses terms like “70 new insights were introduced,” such as resiliency, aggression index, etc., in real time with real data.

How to tell the world the story of sports and entertainment with the Internet of Things.

It starts with data collected from sensors. The floor mat is smart; it can sense pressure and movement.

The glove is a smart glove with sensors; it creates 12 stories… strength, impact, …

World Graph is the representation/model of the world.

It’s not about the statistics of the fight, but the story of the fight.

An AI Agent sits on top of the World Graph. It has one mission: to provide the information you want to know about the sporting event, even if you are not there. What is interesting and fun to know, and how do I provide that information in a “cool way” to my audience? Different people want to know different things, so it can be customized for the relevant people.

The post Notes from AWS re:Invent Live Stream -Werner Vogels – Nov 30, 2017 appeared first on MyLifeIsMyMessage.net.

I made this quick summary of some of the size limits and specs for developing an Amazon Alexa Skill. Please contact me at Amazon Skill Developer (Dallas, Texas) if you need one developed.

Max 8000 characters of speech when Alexa is speaking from text.

MP3 files must have a bit rate of 48 kbps, a sample rate of 16000 Hz, and codec version MPEG Version 2.

https://developer.amazon.com/docs/custom-skills/speech-synthesis-markup-language-ssml-reference.html#h3_converting_mp3
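One way to check an MP3 against these requirements before uploading it is to decode a 4-byte MPEG audio frame header. This minimal sketch assumes you already have the header bytes: it does not skip ID3 tags or scan a file for the first frame, and it only understands MPEG-2 Layer III headers. The function names are hypothetical.

```python
# MPEG-2 Layer III bitrates in kbps, indexed by the header's 4-bit bitrate field
# (index 0 = "free format", 15 = invalid).
MPEG2_L3_BITRATES = [None, 8, 16, 24, 32, 40, 48, 56, 64, 80, 96, 112, 128, 144, 160, None]
# MPEG-2 sample rates in Hz, indexed by the 2-bit sampling-rate field (3 = reserved).
MPEG2_SAMPLE_RATES = [22050, 24000, 16000, None]


def parse_mpeg2_layer3_header(header: bytes) -> dict:
    """Decode bitrate and sample rate from one 4-byte MPEG-2 Layer III frame header."""
    if len(header) < 4:
        raise ValueError("need 4 header bytes")
    b0, b1, b2 = header[0], header[1], header[2]
    if b0 != 0xFF or (b1 & 0xE0) != 0xE0:
        raise ValueError("missing 11-bit frame sync")
    version_bits = (b1 >> 3) & 0b11  # 0b10 means MPEG Version 2
    layer_bits = (b1 >> 1) & 0b11    # 0b01 means Layer III
    if version_bits != 0b10 or layer_bits != 0b01:
        raise ValueError("not an MPEG-2 Layer III frame")
    return {
        "bitrate_kbps": MPEG2_L3_BITRATES[(b2 >> 4) & 0x0F],
        "sample_rate_hz": MPEG2_SAMPLE_RATES[(b2 >> 2) & 0b11],
    }


def meets_alexa_mp3_spec(header: bytes) -> bool:
    """True if the frame is MPEG Version 2 Layer III at 48 kbps and 16000 Hz."""
    try:
        info = parse_mpeg2_layer3_header(header)
    except ValueError:
        return False
    return info["bitrate_kbps"] == 48 and info["sample_rate_hz"] == 16000
```

For example, the header bytes `FF F3 68 00` decode as MPEG-2 Layer III, 48 kbps, 16000 Hz and pass the check, while a typical 128 kbps MPEG-1 header such as `FF FB 90 00` fails.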

Maximum length of simple “conversational/speech” audio files is 90 seconds.

You can play long audio files of any length, such as podcasts, but that requires a separate programming model.

You can optionally include “cards” which a person can see at:

https://alexa.amazon.com/spa/index.html#cards

Brief explanation of cards here: https://developer.amazon.com/docs/custom-skills/include-a-card-in-your-skills-response.html

In addition to signing up with AWS, you also have to have a login here:

https://developer.amazon.com/edw/home.html#/.

There are two parts to a skill: the skill/vocabulary definition and the software code.

Logo Images:

You need a small 108×108 PNG and a large 512×512 PNG file for getting certified and putting the skill in the skill store.

If you decide to use “cards” (see above), they can have images as well, but the size requirements are different:

small 720w x 480h

large 1200w x 800h
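For the “software code” part of a skill, the handler returns a JSON response. Here is a minimal Python sketch that builds one with plain-text speech and a Standard card (the card type that carries small and large images), enforcing the 8000-character speech limit noted above. The helper name `build_response` and the example image URLs are hypothetical; confirm field names against the current Alexa response-format documentation.

```python
import json

MAX_SPEECH_CHARS = 8000  # limit on text Alexa will speak


def build_response(speech: str, title: str, card_text: str,
                   small_image_url: str, large_image_url: str,
                   end_session: bool = True) -> dict:
    """Build an Alexa skill response with plain-text speech and a Standard card."""
    if len(speech) > MAX_SPEECH_CHARS:
        raise ValueError(f"speech is {len(speech)} characters; max is {MAX_SPEECH_CHARS}")
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "card": {
                "type": "Standard",
                "title": title,
                "text": card_text,
                # card images: small 720w x 480h, large 1200w x 800h
                "image": {"smallImageUrl": small_image_url, "largeImageUrl": large_image_url},
            },
            "shouldEndSession": end_session,
        },
    }


if __name__ == "__main__":
    resp = build_response("Hello from Dallas.", "Greeting", "Hello!",
                          "https://example.com/small.png", "https://example.com/large.png")
    print(json.dumps(resp, indent=2))
```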

The post Amazon Alexa Skill – Various Size/Limitations appeared first on MyLifeIsMyMessage.net.

It took me a while to find a good utility to square images and make them a certain size. The results come from this confusing documentation page for ImageMagick.

convert -define jpeg:size=510x510 Test1.jpg -thumbnail "510x510>" -background white -gravity center -extent "510x510" testResult.jpg

Of course, you can change the sizes and the background color. The page above also shows you how to crop the picture, if desired, to fit the square image (rather than padding with the background color).
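Roughly, the command above shrinks the image to fit inside 510x510 (the `>` flag means it only ever shrinks, never enlarges), then centers it on a 510x510 white canvas. This sketch computes that geometry so you can predict the padding; the function name is hypothetical, and ImageMagick's own rounding may differ by a pixel.

```python
def thumbnail_then_extent(width: int, height: int, box: int = 510) -> tuple[int, int, int, int]:
    """Approximate `-thumbnail "510x510>" -gravity center -extent 510x510`.

    Returns (scaled_width, scaled_height, pad_left, pad_top).
    """
    scale = min(box / width, box / height)  # fit inside the box, keeping aspect ratio
    if scale < 1:  # the ">" flag: only shrink images larger than the box
        width, height = round(width * scale), round(height * scale)
    pad_left = (box - width) // 2  # -gravity center splits the padding evenly
    pad_top = (box - height) // 2
    return width, height, pad_left, pad_top
```

For example, a 768x512 photo becomes 510x340 with 85 pixels of white above and below it.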

The following command (mogrify) does exactly the same thing for every .jpg file in the current directory, and writes the output to another directory specified by the -path parameter (reference: mogrify).

mogrify -define jpeg:size=510x510 -thumbnail "510x510>" -background white -gravity center -extent "510x510" -path ../ImageOutputDir *.jpg

Note: When installing on Windows, be sure to check the box to the left of “Install legacy utilities” so it will install the convert.exe program.

See also: Photo.StackExchange/questions/27137K

See also: Downloads for ImageMagick.

The post Squaring Images with ImageMagick appeared first on MyLifeIsMyMessage.net.

In my previous blog post, I talked about how to use ImageMagick to square an image. Now, in this post I’m taking it one step further.

I have a directory of photos from all over the internet, and I want to post them to a social media site. If a picture is taller than it is wide, I need to square it to 510x510 pixels as discussed in the last post (adding a white padding/background). This will keep it from being cut off when I post it to that social media site.

Second, if the picture is “wide” rather than “tall”, then I don’t need to add the background, but I might as well resize it to save upload/posting time. Some pictures downloaded from the internet are very large, so I’m standardizing on resizing them so that the width or height, whichever is smaller, is 510 pixels.

So in the PowerShell script, here’s what is happening:

1) Get all the files in a directory ($inputPath) and loop through them one at a time

2) Call ImageMagick “identify” to return the size of the file (primarily I need to know its width and height)

3) Use RegEx pattern matching to pull the width/height out of the returned string, convert them to numbers, then compare them

4) If it is a wide picture, call the ImageMagick resize function

5) If it is a tall picture, call the ImageMagick convert function. Technically, I’m converting to a thumbnail, but it’s a very large thumbnail.

For both #4 and #5 in the above steps, the output file is written to the $targetPath directory with the same filename.
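The decision in steps 2 through 5 above can be mirrored in a few lines, which is handy for predicting output sizes before running the batch. This is a hypothetical Python sketch (the name `plan_resize` is made up), using the same “first WIDTHxHEIGHT token between spaces” idea as the script's regex.

```python
import re

# First "WIDTHxHEIGHT" token surrounded by spaces, e.g. " 768x512 " in identify's output.
GEOMETRY = re.compile(r" (\d+)x(\d+) ")


def plan_resize(identify_line: str, box: int = 510):
    """Mirror the script's decision.

    'tall' images are padded to a box x box square; 'wide' (or square) ones are resized
    with "510x510^", so the smaller side becomes box. Returns (orientation, out_w, out_h),
    or None when no size is found in the line.
    """
    match = GEOMETRY.search(identify_line)
    if match is None:
        return None
    width, height = int(match.group(1)), int(match.group(2))
    if height > width:
        return ("tall", box, box)       # -thumbnail + -extent always yields box x box
    scale = box / min(width, height)    # "^" fills the box: the smaller side becomes box
    return ("wide", round(width * scale), round(height * scale))
```

Note that `510x510^` has no `>` flag, so small wide pictures are enlarged as well: a 300x200 image would come out as 765x510.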

cls
$inputPath = "e:\Photos\ToResize\"
$targetPath = "e:\Photos\Resized\"
# -File skips subdirectories
$files = Get-ChildItem $inputPath -File
foreach ($file in $files)
{
    # shell out to run the ImageMagick "identify" command (first line only, in case of multi-frame files)
    $output = & magick identify $file.FullName | Select-Object -First 1
    $outFilename = Join-Path $targetPath $file.Name
    # sample value of $output:
    # e:\Photos\ToResize\022cca60-a2a9-49b8-964e-b228acf517f3.jpg JPEG 768x512 768x512+0+0 8-bit sRGB 111420B 0.000u 0:00.000
    Write-Host $output
    # capture the first "WIDTHxHEIGHT" token surrounded by spaces:
    # $Matches[1] = width, $Matches[2] = height
    $pattern = " (\d+)x(\d+) "
    # test the result of -match directly; $Matches keeps the previous file's values when a match fails
    if ($output -match $pattern)
    {
        [int]$width = [convert]::ToInt32($Matches[1])
        [int]$height = [convert]::ToInt32($Matches[2])
        if ($height -gt $width)
        {
            $orientation = "tall"
            # shell out to run the ImageMagick "convert" command: pad to a 510x510 white square
            & convert -define jpeg:size=510x510 $file.FullName -thumbnail "510x510>" -background white -gravity center -extent "510x510" $outFilename
        }
        else
        {
            $orientation = "wide"
            # shell out to run the ImageMagick "convert" command: the smaller side becomes 510
            & convert $file.FullName -resize "510x510^" $outFilename
        }
        Write-Host ("file=$($file.Name) width=$width height=$height orientation=$orientation `n`n")
    }
    else
    {
        Write-Host ("No matches")
    }
}

The post Windows Powershell Wrapper Script for ImageMagick appeared first on MyLifeIsMyMessage.net.

When running the NuGet command "install-package NEST" (or possibly any other package that uses Newtonsoft.Json), you may get the error: "'Newtonsoft.Json' already has a dependency defined for 'Microsoft.CSharp'".

In my case, I was at a client running Visual Studio 2013 and needed to upgrade the version of NuGet. Reference: https://stackoverflow.com/questions/42045536/how-to-upgrade-nuget-in-visual-studio-2013

In Visual Studio: Tools -> Extensions and Updates -> Updates tab -> Visual Studio Gallery. I clicked the "Update" button next to NuGet; it installed, I had to restart Visual Studio, then I tried the original install-package command again and it worked.

The post Error: ‘Newtonsoft.Json’ already has a dependency defined for ‘Microsoft.CSharp’ appeared first on MyLifeIsMyMessage.net.

Suppose you are at a new client, and you want to quickly see how all the orchestrations are bound to send and receive ports.

Here’s some code to help you get started with that.

select op.nvcName as 'OrchInternalPortName',

o.nvcName as 'OrchName',

'SendPort' as 'SendOrReceive',

sp.nvcName as 'PortName'

from bts_orchestration_port op

inner join bts_orchestration o on o.nid = op.nOrchestrationID

inner join bts_orchestration_port_binding opb on opb.nOrcPortID = op.nID

inner join bts_sendport sp on sp.nid = opb.nSendPortID

--order by sp.nvcName

union

select op.nvcName as 'OrchInternalPortName',

o.nvcName as 'OrchName',

'ReceivePort' as 'SendOrReceive',

rp.nvcName as 'PortName'

from bts_orchestration_port op

inner join bts_orchestration o on o.nid = op.nOrchestrationID

inner join bts_orchestration_port_binding opb on opb.nOrcPortID = op.nID

inner join bts_receiveport rp on rp.nid = opb.nReceivePortID

order by PortName, OrchName

Sorry, can’t show any actual results at this time.

This is similar to, but different from, a prior SQL xref I posted that ties ReceivePorts to SendPorts.

I actually forgot about this, and did the same code again on another day: Xref BizTalk Receive Ports to Orchestrations.

The post BizTalk SQL to XRef Send/Receive Ports to Orchestrations appeared first on MyLifeIsMyMessage.net.

You can turn on message tracking in BizTalk at the Receive Port and Send Port level (as well as various levels in the orchestration).

Here are the views provided by the BizTalk install.

use biztalkDtaDb

--select * from TrackingData

--select 'btsv_Tracking_Fragments', * from btsv_Tracking_Fragments

select 'btsv_Tracking_Parts', * from btsv_Tracking_Parts

select 'btsv_Tracking_Spool', * from btsv_Tracking_Spool

To get the Send/Receive port or more info about the message, you need to join to dtav_MessageFacts. The idea for this join came from sample code here.

SELECT top 200

a.[Event/Direction],

a.[Event/Port],

a.[Event/URL],

CONVERT(VARCHAR(10), a.[Event/Timestamp], 111) as [date],

DATEPART(HOUR,a.[Event/Timestamp]) as [Hour],

imgPart,

imgPropBag

FROM [dbo].[dtav_MessageFacts] a

inner join dbo.btsv_Tracking_Parts b on a.[MessageInstance/InstanceID] = b.uidMessageID

Note: Later I added the following line to the select list. You have to use DataLength() instead of Len() to get the size of an image field.

DataLength(imgPart) as Length,

The body of the message is stored in imgPart of the btsv_Tracking_Parts view, but unfortunately it's shown in hex.

The following site can convert the hex to ASCII (you might also need one that converts to Unicode): https://www.rapidtables.com/convert/number/hex-to-ascii.html. Paste the hex data in the top, click Convert, and your text will appear in the bottom half. You won't be able to see it all there, but you can copy/paste it into Notepad++ or some other editor.
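If you would rather not paste message bodies into a website, Python can do the same hex-to-text conversion locally. A minimal sketch; the function name is hypothetical. As noted below, BizTalk may store message parts compressed, in which case decoding the raw hex yields gibberish rather than your message.

```python
def hex_to_text(hex_dump: str, encoding: str = "ascii") -> str:
    """Decode a hex dump such as SSMS shows for an image column ("0x48656C6C6F...")."""
    cleaned = hex_dump.strip()
    if cleaned[:2].lower() == "0x":
        cleaned = cleaned[2:]
    # bytes.fromhex tolerates whitespace between byte pairs;
    # undecodable bytes become U+FFFD instead of raising an error
    return bytes.fromhex(cleaned).decode(encoding, errors="replace")
```

Pass `encoding="utf-16-le"` if the body turns out to be Unicode (two bytes per character).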

While it's nice to use the built-in features of BizTalk when possible, they will typically have limitations compared to custom options. In a few places where I worked, we implemented our own "Trace" that writes data to a SQL trace table. We had our own concept of promoted fields to identify the trace, such as the location where the trace was captured (pipeline, orchestrations, before/after map, etc.), a user type key, and a correlation token that can tie together traces across an entire business process.

See also: 3 ways of programmatically extracting a message body from the BizTalk tracking database (Operations DLL, SQL, and WMI). This article explains how BizTalk compresses the data with BTSDBAccessor.dll.

The post Accessing BizTalk Tracking data with SQL Views appeared first on MyLifeIsMyMessage.net.

How do you find the hidden map name in the BizTalkMgmtDb (BizTalk Management Database)?

If you look at the table names, you will obviously find bt_MapSpec, but it doesn’t contain the map name. The map itself is hidden in the bts_Item table, which you have to join to. I found this on Jeroen Maes Integration Blog. He has a more complex query that finds a map based on the input/output target namespace. He also joins to the bts_Assembly table.

My goal was just to list all maps containing some sequence of letters (such as a customer name or abbreviation).

use BizTalkMgmtDb

select i.name, * from bt_MapSpec m

inner join bts_item i on m.itemid = i.id

where i.Name like '%ABC%' -- just the map name

-- where i.FullName like '%ABC%' -- optionally use the fully qualified name

I’m surprised that the Type column doesn’t seem to be populated with a number that indicates whether the bts_Item is a map, a schema, or something else.

The post How to Query Maps in Biztalk’s Management Database (BiztalkMgmtDb) appeared first on MyLifeIsMyMessage.net.

Suppose you want to know what BizTalk Orchestrations are bound to a receive port.

Perhaps you ran my query to list send ports related to a receive port.

NOTE: Still experimental code; haven’t verified much.

select

rp.nvcName as 'ReceivePort',

op.nvcName as 'OrchPort',

op.nBindingOption,

o.nvcName as 'Orchestration'

--pto.nvcName as 'PortTypeOperation',

from bts_receiveport rp

inner join bts_orchestration_port_binding opb on rp.nID = opb.nReceivePortID

inner join bts_orchestration_port op on op.nID = opb.nOrcPortId --and op.nPortTypeID in (1,9) -- receive ports only (9 = activating receive)

inner join bts_orchestration o on o.nID = op.nOrchestrationID

--where rp.nvcName like '%ABC%'

See also the query on this page to do similar by message type: https://social.msdn.microsoft.com/Forums/en-US/06080af3-7c2f-4460-8b07-a668eab9df12/relate-ports-and-messagetypes?forum=biztalkgeneral.

The post SQL Query to XRef BizTalk ReceivePorts to Orchestrations appeared first on MyLifeIsMyMessage.net.

When you come to a new client and have to fix bugs or figure out how the system works, it helps to have some “forensic” SQL tools. Suppose you want to find all columns in a database, regardless of which table they are in (and of course, show the table name in the query results). In my case, I was dealing with PickupDateTime, but the screen was showing two fields, a from and a to date/time. So I wanted to look for all columns that contain both “pickup” and “from”, in that order.

Use MyDatabaseName

SELECT t.name AS table_name,

SCHEMA_NAME(schema_id) AS schema_name,

c.name AS column_name

FROM sys.tables AS t

INNER JOIN sys.columns c ON t.OBJECT_ID = c.OBJECT_ID

WHERE c.name LIKE '%pickup%from%'

ORDER BY schema_name, table_name;

Example code came from Pinal Dave’s SQL Blog.
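The `'%pickup%from%'` pattern matches names that contain “pickup” followed, somewhere later, by “from”. This Python sketch translates simple LIKE patterns (`%` and `_` only, no `[...]` sets or ESCAPE clause) into regular expressions to show what matches. It assumes a case-insensitive collation, which is common for SQL Server but not guaranteed; the function names are hypothetical.

```python
import re


def like_to_regex(pattern: str) -> re.Pattern:
    """Translate a SQL LIKE pattern using only % and _ into a whole-string regex.

    IGNORECASE assumes a case-insensitive collation.
    """
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")       # % = any run of characters, including none
        elif ch == "_":
            parts.append(".")        # _ = exactly one character
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts) + r"\Z", re.IGNORECASE | re.DOTALL)


def matching_columns(columns: list[str], pattern: str) -> list[str]:
    """Return the column names that the LIKE pattern would match."""
    regex = like_to_regex(pattern)
    return [c for c in columns if regex.match(c)]
```

Remember that `_` is itself a wildcard in LIKE, so searching for a literal name such as `pickup_from` also matches `pickupXfrom`.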

The post T-SQL Query to Find a Given Column Name (or Like-Column) From All Tables in a Database appeared first on MyLifeIsMyMessage.net.

Here is some more forensic/investigative code. Suppose you need to make sure a value gets in a certain column, but you don’t know the system at all.

Maybe there is a trigger or stored proc that sets the column based on some other table/column. This code gives you a list of all the stored procedures, triggers, and functions (and even views) that use that column name (or any other string).

Create table #temp1

(ServerName varchar(64), dbname varchar(64)

,spName varchar(128),ObjectType varchar(32), SearchString varchar(64))

Declare @dbid smallint, @dbname varchar(64), @longstr varchar(5000)

Declare @searhString VARCHAR(250)

set @searhString='OpenDate'

declare db_cursor cursor for

select dbid, [name]

from master..sysdatabases

--where [name] not in ('master', 'model', 'msdb', 'tempdb', 'northwind', 'pubs')

where [name] in ('MyDatabase')

open db_cursor

fetch next from db_cursor into @dbid, @dbname

while (@@fetch_status = 0)

begin

PRINT 'DB='+@dbname

set @longstr = 'Use ' + @dbname + char(13) +

'insert into #temp1 ' + char(13) +

'SELECT @@ServerName, ''' + @dbname + ''', Name

, case when [Type]= ''P'' Then ''Procedure''

when[Type]= ''V'' Then ''View''

when [Type]= ''TF'' Then ''Table-Valued Function''

when [Type]= ''FN'' Then ''Function''

when [Type]= ''TR'' Then ''Trigger''

else [Type]/*''Others''*/

end

, '''+ @searhString +''' FROM [SYS].[SYSCOMMEnTS]

JOIN [SYS].objects ON ID = object_id

WHERE TEXT LIKE ''%' + @searhString + '%'''

exec (@longstr)

fetch next from db_cursor into @dbid, @dbname

end

close db_cursor

deallocate db_cursor

select * from #temp1

Drop table #temp1

Code from: StackOverflow

The post T-SQL – Search all Stored Procs, Triggers, Functions, and Views for Column Name appeared first on MyLifeIsMyMessage.net.

When using SoapUI to call a BizTalk published orchestration or schema, you might get this error response/XML from SoapUI:

Main error text is:

The message could not be processed. This is most likely because the action ‘http://Neal.Temp.WCFTargetNamespace/Outbound214Service/Send214Request’ is incorrect or because the message contains an invalid or expired security context token or because there is a mismatch between bindings. The security context token would be invalid if the service aborted the channel due to inactivity. To prevent the service from aborting idle sessions prematurely increase the Receive timeout on the service endpoint’s binding

This assumes no security is needed when you are first testing on your local machine; you can tighten up the security after you get the basic test working.

References: https://adventuresinsidethemessagebox.wordpress.com/2012/10/22/calling-a-wcf-wshttp-service-from-soapui/

The post SOAPUI Security Errors when testing Biztalk Published Schema (or Orchestration) as WebService appeared first on MyLifeIsMyMessage.net.